Note: This page exists as an archived version. The finalized project is available at the link at the top of the page and here.

Open Snapchat to take a selfie. Fix your hair, tilt your chin just so, perhaps parlay a smize and — No no, pause. You realize now that this is the perfect moment. This is the moment where you take a selfie such that you become another iteration of that canonical dog-eared millennial archetype.

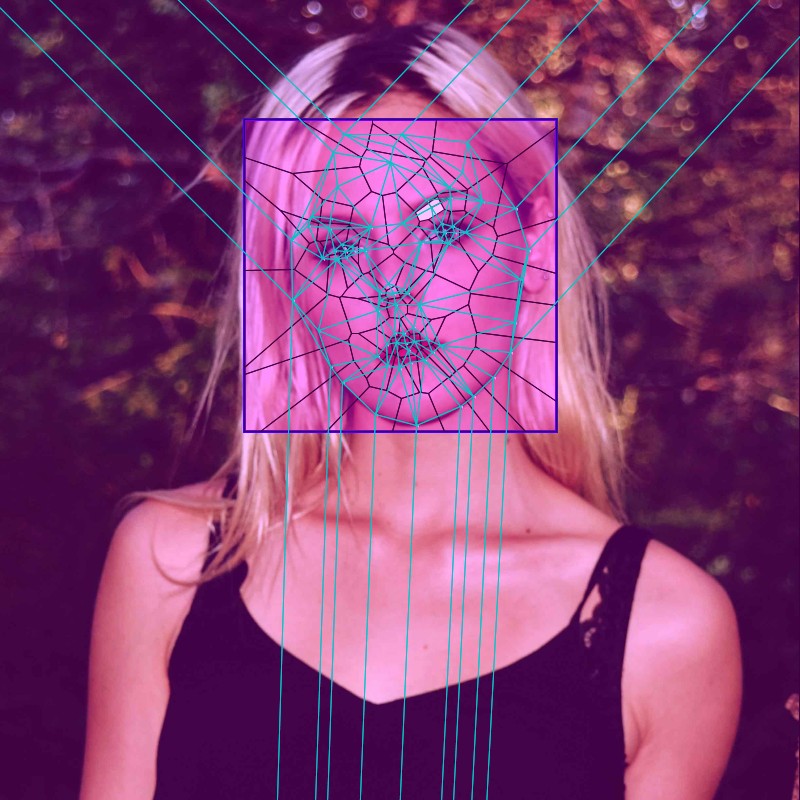

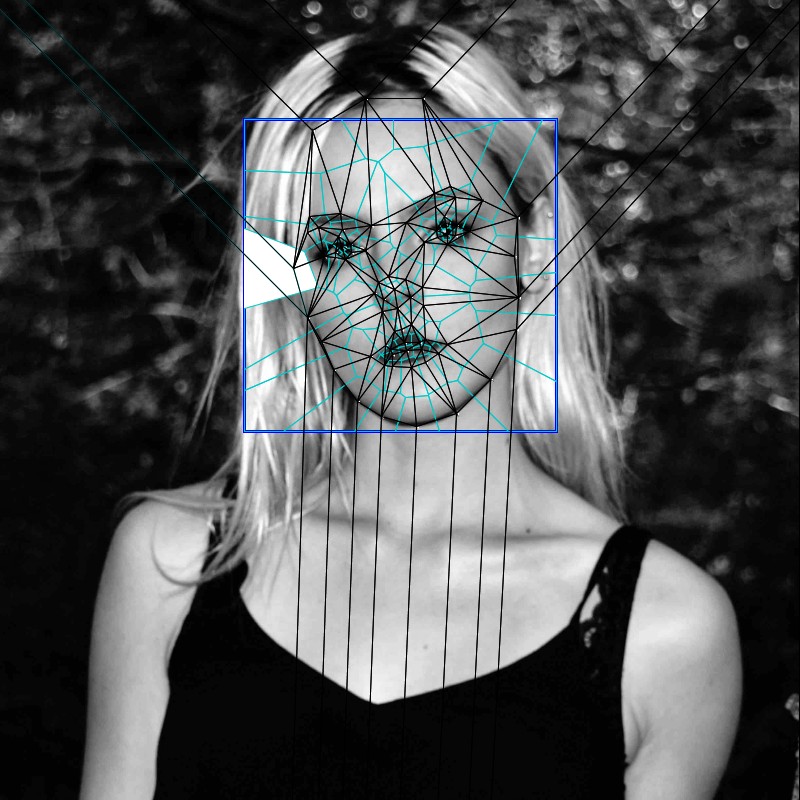

Okay, reposition, prep that puppy pout and tap the screen. And then, there, in that brief interim between pressing the screen and the arrival of a cascade of filter options, it comes to you, like a flock of magical realist butterflies in Macondo: a triangulation.

At first glance, it is only a hallucinatory splice of memory from a Computational Geometry lecture, long ago. But no, it is truly there: the revelation of the structure that is the topology of your face.

There is a brief moment, between pressing the screen and the arrival of the filter options, when the Snapchat screen makes itself vulnerable, or seemingly so. Perhaps the algorithm (point location, triangulation, alpha mask generation, etc.) is simply not fast enough to go unnoticed. Or perhaps, in offering a glimpse of your triangular decomposition, it gives the user that tantalizing, endorphin-surging rush of peeking behind the curtain as the Wizard soliloquizes about the mysteries of the “cloud.”

Or perhaps, like most users, you never noticed the triangular mesh on your hurried way to transfigure yourself into some canonical dog-eared millennial archetype. That’s about to change.

A triangulation is a maximal planar subdivision: a breaking of a polygon or a point set into triangles, such that no further edge can be added without crossing an existing one. Segmenting a larger space into manageable pieces enables a myriad of complex operations: point location (e.g. Google Maps), an optimized distribution of vantage points (e.g. art gallery guarding), or, in this case, the creation of a mesh of a face for computer graphics rendering.

As with all divisions of space, there exists a multiplicity of means to deconstruct a face. This project began as a search for an optimal facial triangulation.
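However a face is carved up, the size of the result is fixed in advance. As a standard fact from computational geometry (not something specific to this project): for a set of $n$ points in the plane, not all collinear, with $k$ of them on the boundary of the convex hull, every triangulation has

$$
\text{triangles} = 2n - 2 - k, \qquad \text{edges} = 3n - 3 - k .
$$

For a purely illustrative set of $n = 6$ landmarks with $k = 5$ on the hull, that gives $2 \cdot 6 - 2 - 5 = 5$ triangles and $3 \cdot 6 - 3 - 5 = 10$ edges. The multiplicity lies in which edges are chosen, not in how many.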

What does it mean to have an optimal facial triangulation? In the context of this project, it is a fast yet reliable algorithm that renders an accurate mapping of facial landmarks (eyes, ears, mouth and nose) for later processing. We can think of it this way: for a projected filter to persist on a face, the algorithm that detects landmarks and then connects them with relationship-denoting edges must return a triangulation rooted in the variable nature of facial construction.

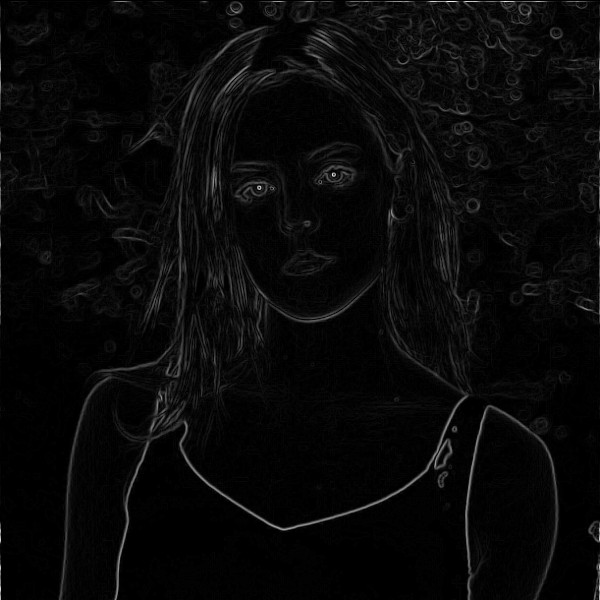

A facial triangulation algorithm must detect facial landmarks and then triangulate that representative point set such that graphics mappings can be persistently implemented, irrespective of shifts in light, direction or background noise in the camera image capture.
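The second half of that pipeline, triangulating a detected point set, can be sketched in a few lines. This is a minimal illustration, not the method Snapchat or this project uses: it assumes a Delaunay triangulation (one common choice, in which no point lies inside any triangle's circumcircle), it skips landmark detection entirely, and the landmark names and coordinates are made up. The brute-force approach is far too slow for real-time use and is chosen here only because it is easy to read.

```python
from itertools import combinations


def orient(a, b, c):
    """Twice the signed area of triangle abc (positive if counter-clockwise)."""
    return (b[0] - a[0]) * (c[1] - a[1]) - (b[1] - a[1]) * (c[0] - a[0])


def in_circumcircle(a, b, c, p):
    """True if p lies strictly inside the circle through a, b, c.

    Assumes a, b, c are given in counter-clockwise order.
    """
    ax, ay = a[0] - p[0], a[1] - p[1]
    bx, by = b[0] - p[0], b[1] - p[1]
    cx, cy = c[0] - p[0], c[1] - p[1]
    det = ((ax * ax + ay * ay) * (bx * cy - cx * by)
           - (bx * bx + by * by) * (ax * cy - cx * ay)
           + (cx * cx + cy * cy) * (ax * by - bx * ay))
    return det > 0


def delaunay(points):
    """Brute-force Delaunay triangulation, O(n^4).

    Keeps every triangle whose circumcircle contains no other point.
    Returns triangles as tuples of indices into `points`. Assumes no four
    points lie on a common circle.
    """
    n = len(points)
    triangles = []
    for i, j, k in combinations(range(n), 3):
        o = orient(points[i], points[j], points[k])
        if o == 0:
            continue  # collinear triple: not a triangle
        if o < 0:
            j, k = k, j  # reorder so the triangle is counter-clockwise
        a, b, c = points[i], points[j], points[k]
        others = (points[m] for m in range(n) if m not in (i, j, k))
        if not any(in_circumcircle(a, b, c, p) for p in others):
            triangles.append((i, j, k))
    return triangles


if __name__ == "__main__":
    # Hypothetical landmark positions in pixel coordinates (illustrative only).
    landmarks = {
        "left_eye": (30, 40),
        "right_eye": (70, 42),
        "nose": (50, 60),
        "mouth_left": (35, 80),
        "mouth_right": (66, 82),
        "chin": (50, 100),
    }
    names = list(landmarks)
    for tri in delaunay(list(landmarks.values())):
        print([names[i] for i in tri])
```

Because the triangles are stored as indices into the landmark list rather than as coordinates, the same mesh can be redrawn as the landmarks move from frame to frame, which is one way to picture the persistence described above.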

This project seeks to flesh out what is known as algorithmic transparency — herein defined as a clear and meticulous dissection of the numerous steps of an algorithm, for the goal of elucidating the structure and decisions of a perhaps-opaque-seeming technology. In short, to allow a user to peek behind the wizard’s curtain.

Why?

We delineate spaces without thinking. This is a way to think about how we create referential landmarks to ourselves and to other points. This is an exploration of space, divisions of space and the landmarks we forget to notice on the landscapes that we most frequently apotheosize and excoriate concurrently — our own faces.

A copy of the more formal research paper that resulted from this project is available in PDF format via the link at the top of this page.